R.I.P. Explainer Journalism

A week with the New Bing has helped me see the future of answers, and it's full of possibility, unless you work for outlets like Vox.

I’ve been playing with Bing’s new chat search for the past week. For those who missed it, Bing has retooled its search function to let you ask it questions rather than straight-up search for links.

I’ve written about this previously. Search as we know it via Google and its ilk is about finding out how to answer your questions. You type in keywords and it serves up links that are supposed to be the most relevant for your quest, but you still have to do the work of sifting through the information and figuring out what is the most relevant for what you want to know.

Imagine, for example, you’re going on a trip to Disneyland next month and want to know the best hotels. Google will serve you up a mix of sponsored ads for hotel booking sites as well as sites that have written about it (though many of them are SEO content farms that dump text on the web and make commission money if you book through the buttons they conveniently give you in their text). You have to do so much evaluation of sources that it takes a lot longer to get an answer, and it has made search feel broken for quite some time. Have you forgotten the question I asked up top? What’s the best place to stay? You’re buried in link swimming.

The idea behind the new Bing, or Google’s coming Bard, or, eventually, ChatGPT is that the search result is the answer to your question rather than a bucket full of links and a good-luck wish. Bing combs through the sources it finds and gives you the best result, or the top 5 or 10 if that’s what you ask it. There are questions about Bing’s sourcing, of course (look at how sketchy those sources are!), and about how it knows to judge the good links from the self-interested ones it finds. But as these technologies mature, the hope is you’ll get answers from search rather than a bunch of paths to investigate. Quality of sources can be upgraded, but the engine is there.

Beyond commercial use, the idea that we can get answers from search is intriguing. For much of what we search, we want to know the answer but don’t really want to do the difficult work of source-checking when the stakes are low (i.e., democracy or our own money isn’t on the line).

That’s where I’m going with today’s post. I’ve got a silly example of Bing search's chatbot, one where the stakes definitely are low. It’s an example of why I think it's a bit more useful for current events answer-seeking than some of its chat search competitors at the moment.

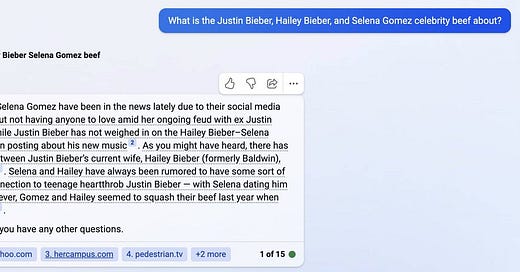

While I was driving last week, a podcast referenced the Justin Bieber / Hailey Bieber / Selena Gomez online feud as if we all know what it is. Which I decidedly do not. I don't follow celebrity news other than what headlines surface in my Twitter feed. This reference was a good example of something I just wouldn't care to Google or even go look up on a news site. But the reference on the podcast was in comparison to something else in the news, and I wanted to understand that thing they were talking about. So I asked Bing search and got this:

So right away, something new. First, the answer from Bing is based on current news. ChatGPT's body of knowledge stops with 2021 texts, so it’s not combing the latest news or information. For example, ask ChatGPT why Elon Musk is interested in Twitter and you’ll get an extremely 2021 answer.

But with the Bing result, you get something that’s pulled from the news, and more importantly, unlike with ChatGPT, you get a list of the sources it’s building the answer from. Useful, and much more transparent.

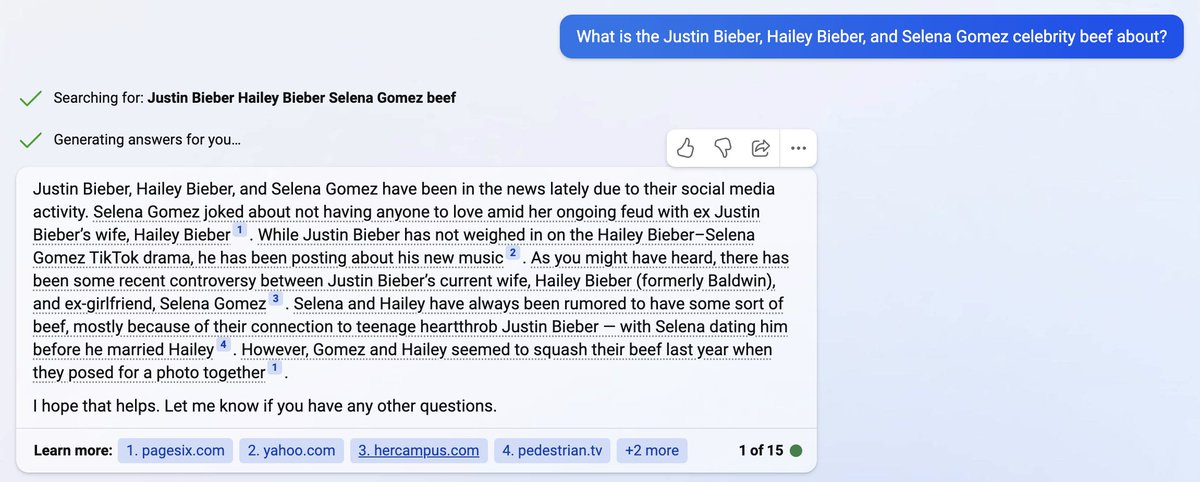

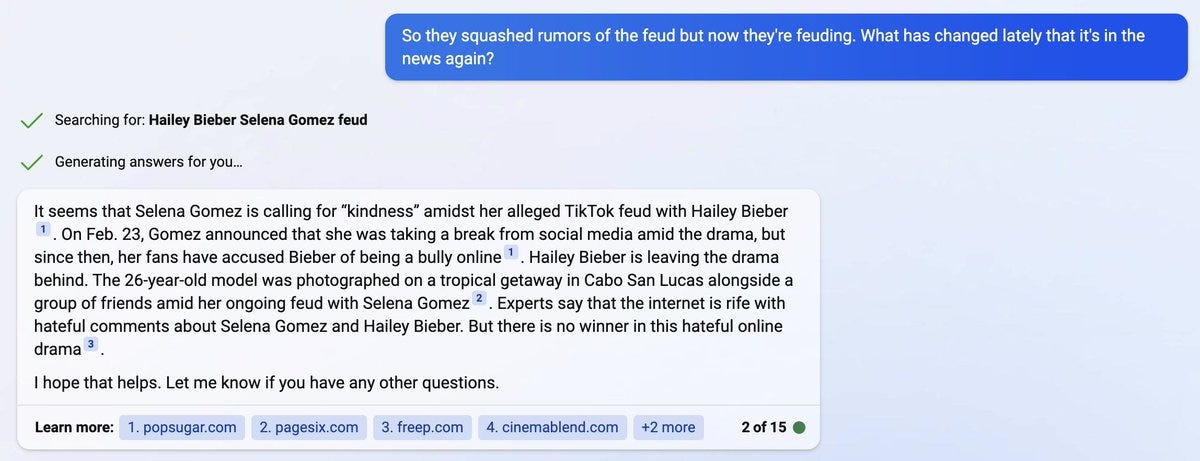

But also, notice in the screenshot above that the answer has limited helpfulness. The opening sentences to the answer are somewhat focused on what I'm asking about, but it's really vague. And then it implies at the end that the feud isn't really a thing. So I ask a follow-up that sort of notes the contradiction in Bing’s original answer and get this:

The follow-up question was targeted, and I got a more specific answer. This is me playing the director role that I’ve written about previously, shaping the responses I get with follow-up questions to dive deeper into the premise behind my question. In this case, I asked why chatter about this feud is surfacing again despite the feud rumors being quashed, making the query much more contextual for current events rather than the whole of online text. I'm asking it to explain, basically, why it surfaced on a podcast this week without mentioning the podcast.

In other words, to explain the news to me. And the answer was much better.

As I said, harnessing AI technologies is going to rely on us being able to ask questions and direct it to the best answer. The computer didn’t know I was asking this question because of something recent, and so it gave me a summed-up version based on years of material. Only by narrowing my question and being more precise with my query was I able to shape it to give me the answer I wanted.

In this case, the "best" answer is one that helps me get what I’m looking for with some degree of precision (how much depends on the stakes; higher stakes demand more precision). I continue to think that the future is asking questions to shape AI outputs. We will become directors and producers more and more, or at least we'll need to as we automate basic explainers in search. The goal hasn't changed: understanding. But to get there, we have to be precise in our questions, and one of the revealing things about AI is that our questions are much vaguer than we think they are. That’s why I often compare talking with an AI to talking with a child. It’s only in their open-minded confusion that we see how much we take for granted that people know what we mean.

Another small example. We were playing with Craiyon image generation in class today and we collectively decided to ask it to give us an image of “a ruby sitting on the Walk Of Fame in Los Angeles.” Here’s what it served up:

In most cases, it decided “ruby” is a woman! We changed the query to “ruby gem” and got something more precise:

Anyhow, back to Bing.

There's something interesting here: asking AI to explain the news based on the questions we’re asking and the answers we seek. So much of news construction is premised on us keeping up with the news. Entering a story five to six days after the original one broke can be a challenge because journalism style is to put the most recent or pressing information first. So you have to read further down for context or search previous stories just to understand the news of the day. You can probably guess what happens. People either don’t do that, so they have a halfway understanding of the news, or they quit the news because it’s so difficult to use.

Explainer journalism has tried to fill this void. It’s a fairly new form of the craft, popularized by sites like Vox that don’t break news so much as give you stories that help you get caught up. Many of these sites are premised on giving you all the information and context you need. But this is an enormous human effort, very costly to construct and maintain. It requires reporters who are voracious readers and thorough researchers, and who are skilled enough to write comprehensive explainer pieces. And then, after all that, you have to keep updating the piece as the story evolves, because a dated explainer is useless. It’s draining in time, labor, and money.

Something like this Bing search is eventually going to kill explainer journalism. If you can automate it and have it constantly searching for updates, what was a very expensive human enterprise eventually gets easier and more economical to produce. Still to come are explainers built on better sourcing, because trust is going to be important for some types of news such as politics.

But this example also shows a downside. The initial explanation I got from Bing was ... not great. And the only reason I know this is that I was thinking critically and realized it hadn't really answered what was driving my question. So this is not a great tool for passive use. I also found some of its sourcing sketchy. Other queries could ask for more mainstream sources and name them, allowing the searcher to limit the range of where it’s drawing information from.

We have a lot to do before I would trust this tool consistently, but I can see a big piece of the future now.

Jeremy Littau is an associate professor of journalism and communication at Lehigh University. Find him on Twitter, Mastodon, or Post.